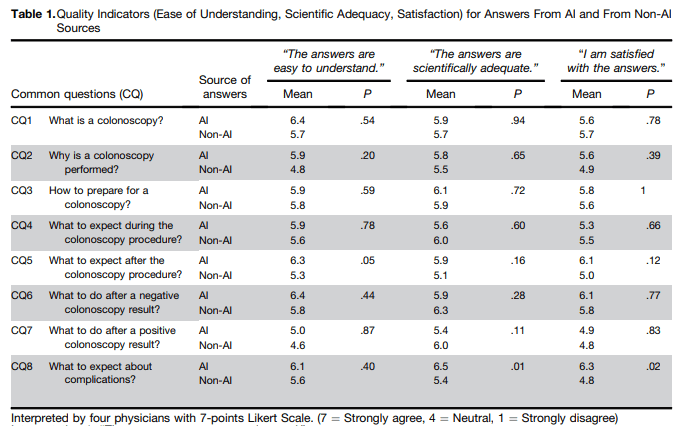

T-C Lee et al. Gastroenterol 2023; 165: 509-511. Open Access! ChatGPT Answers Common Patient Questions About Colonoscopy

In this study, ChatGPT's answers to common questions about colonoscopy were compared with the answers on the publicly available webpages of 3 randomly selected hospitals from the top-20 list of the US News & World Report Best Hospitals for Gastroenterology and GI Surgery.

Methods: To assess the quality of the ChatGPT-generated answers objectively, 4 gastroenterologists (2 senior gastroenterologists and 2 fellows) rated 36 pairs of common questions (CQs) and answers, displayed in random order, on a 7-point Likert scale for the following quality indicators: (1) ease of understanding, (2) scientific adequacy, and (3) satisfaction with the answer (Table 1). Raters were also asked to judge whether each answer was AI generated.

Key findings:

- ChatGPT answers were similar to the non-AI answers but had higher mean scores for ease of understanding, scientific adequacy, and satisfaction.

- The physician raters identified ChatGPT-generated answers with only 48% accuracy.

My take: This is yet another study, this time focused on gastroenterology, that shows how physicians and patients may benefit from using chatbots to improve communication.

Related blog posts:

- ChatGPT Passes the Bar, an MBA exam, and Earns Medical License?

- Have you tried out ChatGPT?

- Chatbots Helping Doctors with Empathy

- Answering Patient Questions: AI Does Better Than Doctors

- What I Don’t Want to See: Catastrophic Antiphospholipid Syndrome

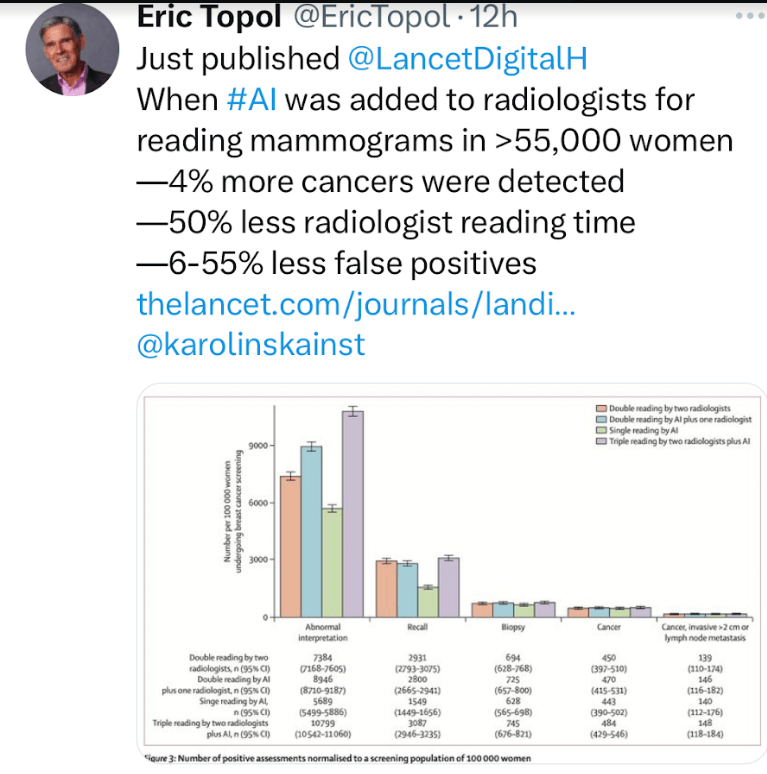

Also this: