Recently, Dr. Jennifer Lee gave our group an excellent update on artificial intelligence (AI) for pediatric gastroenterology. My notes below may contain errors of transcription and omission.

- AI is ubiquitous: it helps you log in to your phone, powers traffic apps, filters spam from email, and even edits Bowel Sounds (gets rid of the ‘umms’)

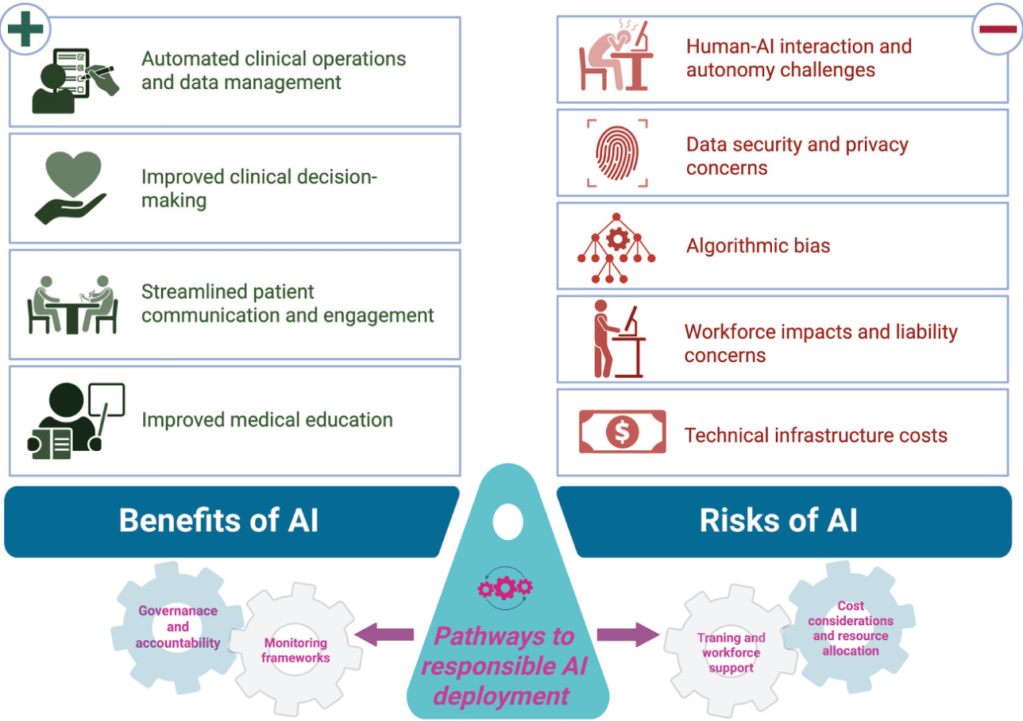

- AI can help and AI can harm

- Dr. Lee thinks that AI is not going to replace doctors and may help doctors in their clinical work

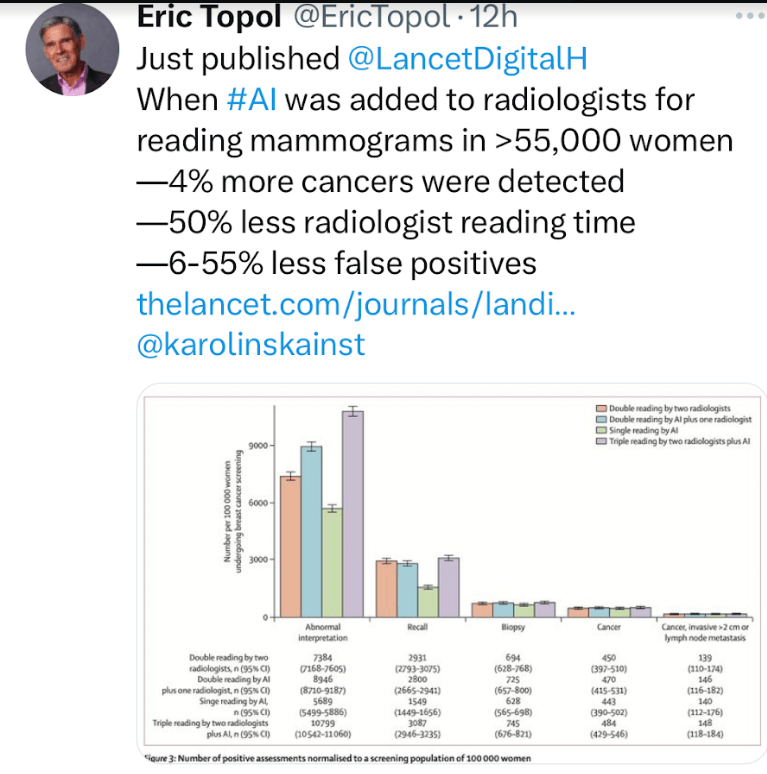

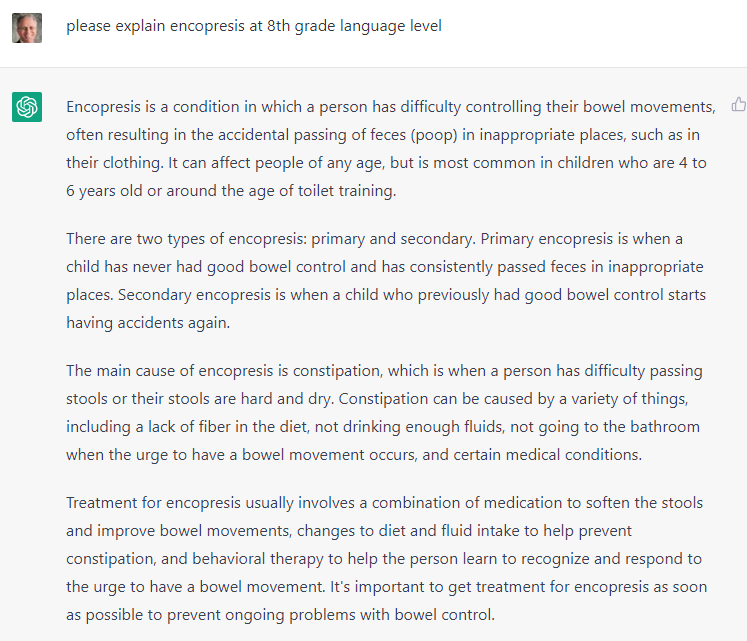

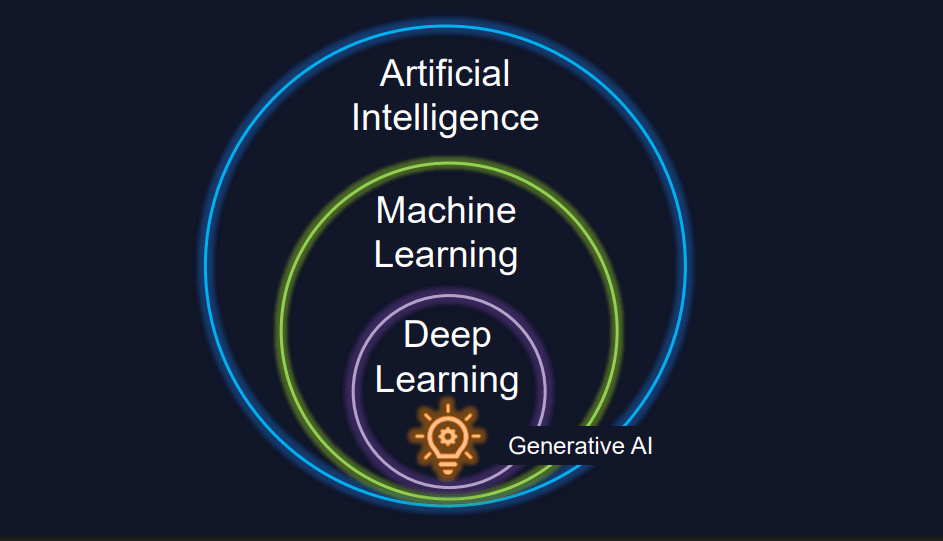

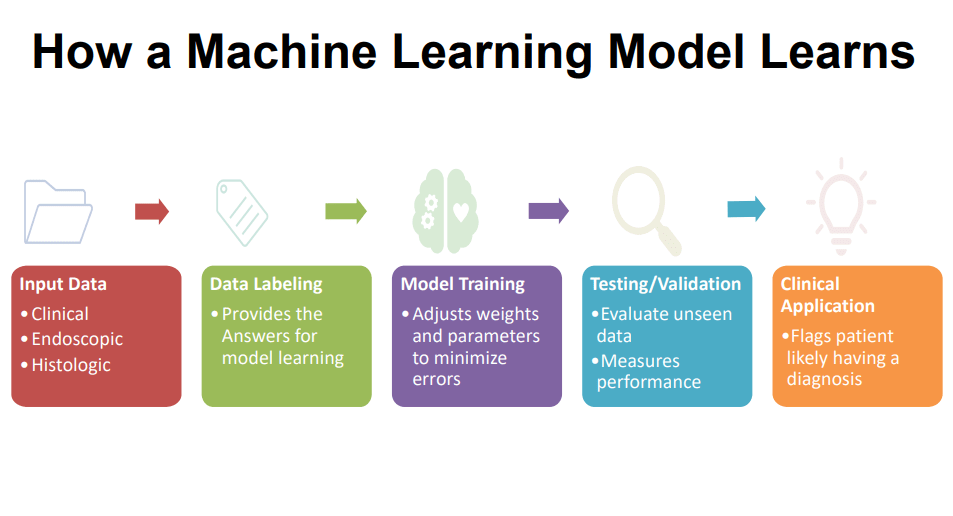

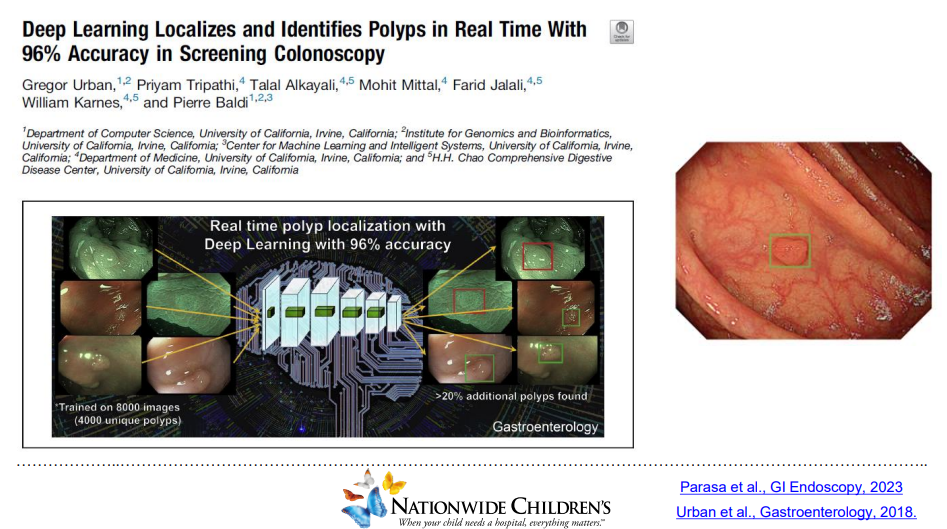

- AI is teaching computers to think and predict problems. This can include analyzing radiology images and endoscopic findings (e.g., polyps), interpreting EKGs, helping with voice recognition, and scribing office visits (still in early stages)

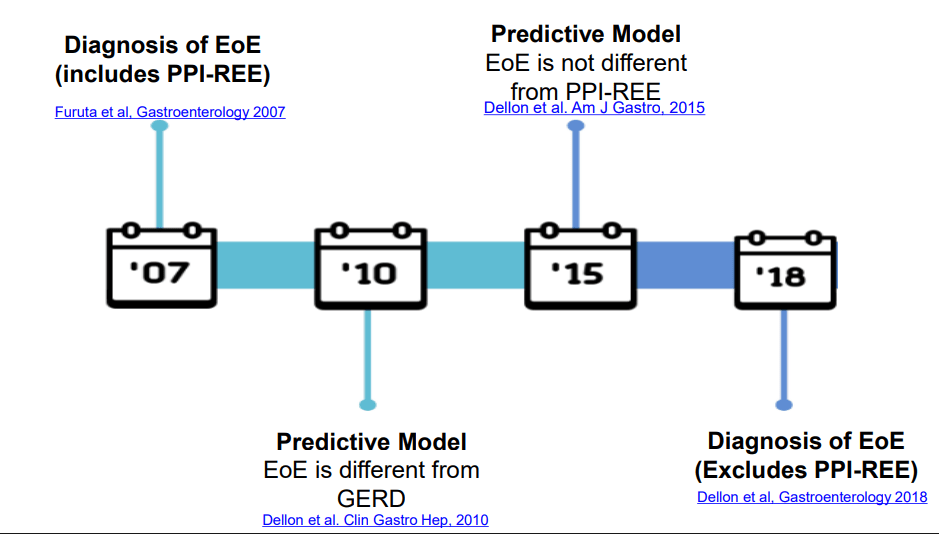

- For EoE, it was hypothesized that PPI-REE was different from EoE. However, no significant differences were found. Thus, diagnosis of EoE no longer requires exclusion of PPI-REE. (Related blog posts: Do We Still Need PPI-REE?, Updated Consensus Guidelines for Eosinophilic Esophagitis)

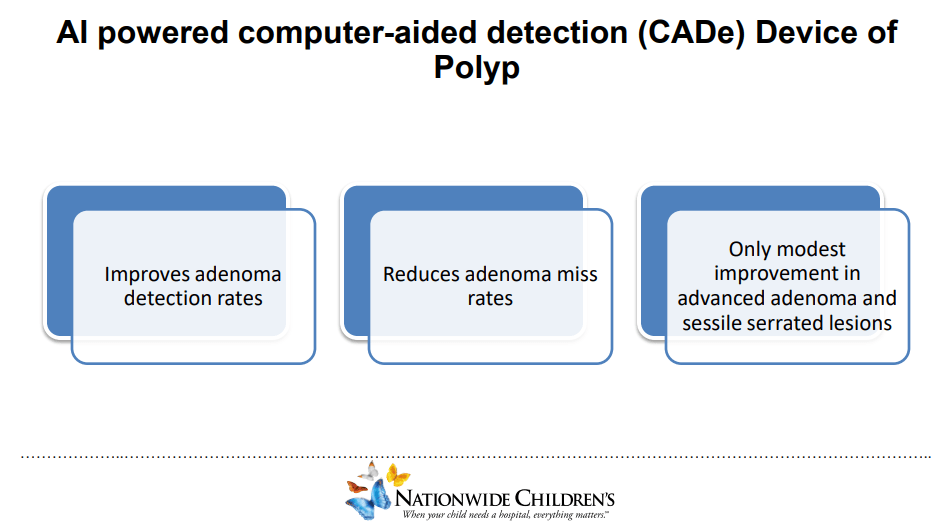

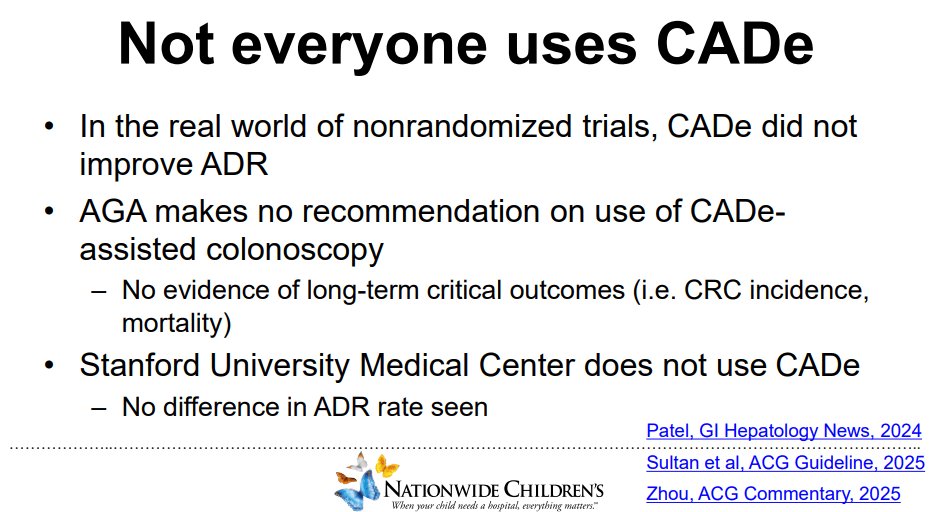

- For colonoscopy, AI may aid polyp detection but whether this is clinically meaningful is unclear

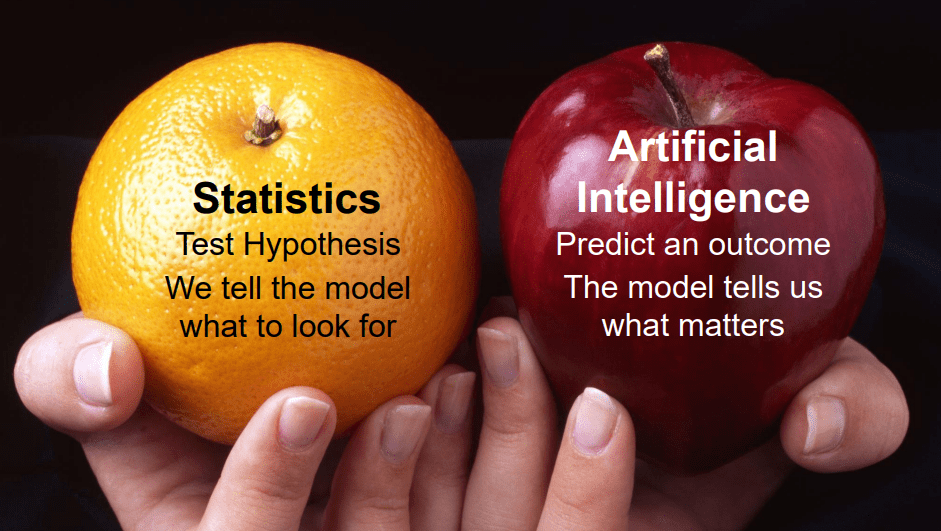

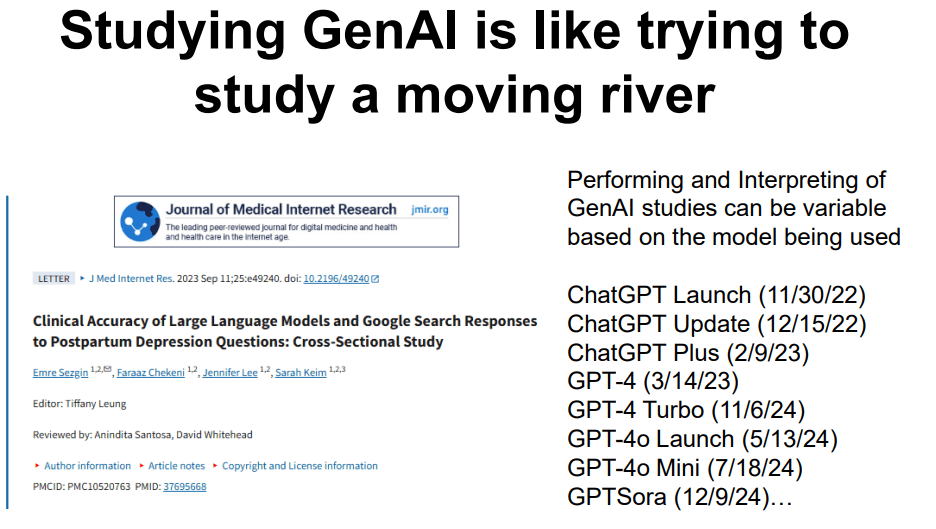

- With more complex analysis, AI is less transparent

- AI algorithms can increase bias

- Reliance on AI could lead to skill deterioration. An MIT study showed less brain activity in people using ChatGPT

- Generative AI can create a summary of a patient chart. EHRs are partnering with AI

- Agentic AI refers to AI that is set up to act autonomously, such as reminding patients to get vaccines or make appointments, or helping schedule appointments

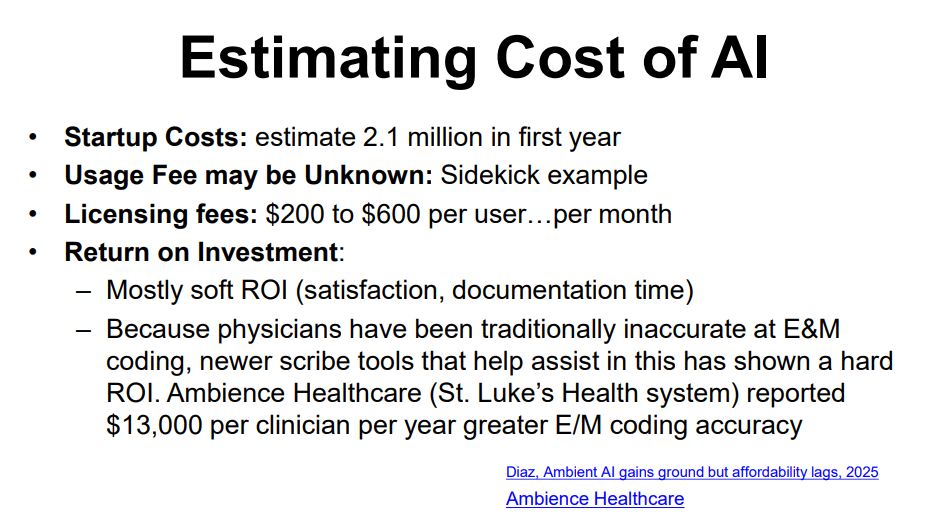

- AI in the clinic and hospital may help reduce documentation burden, improve satisfaction and improve safety for patients

- AI does have a problem of hallucination (‘making stuff up’) (my comment: so can people). Case report of a man admitted to the hospital after following ChatGPT advice and substituting sodium bromide for table salt to reduce salt intake (Eichenberger et al. Annals of Internal Medicine, 2025. A Case of Bromism Influenced by Use of Artificial Intelligence)

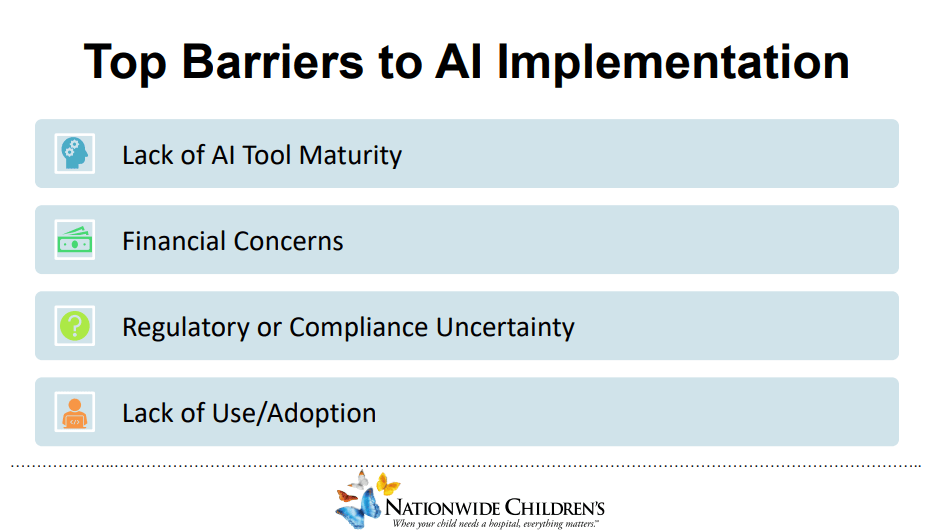

- AI tools are still in early stages; however, ChatGPT uptake has been much quicker than previous internet tools

Related blog posts:

- AI for GI

- Artificial Intelligence in the Endoscopy Suite

- Rising Scientific Fraud: Threats to Research Integrity Plus One

- The Future of Medicine: AI’s Role vs Human Judgment

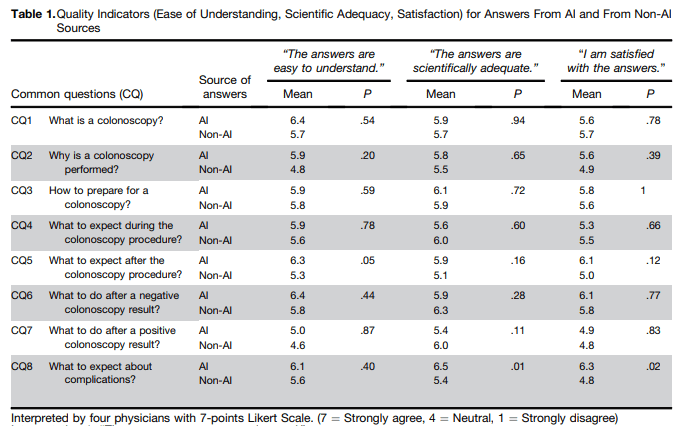

- ChatGPT4 Outperforms GI Docs for Postcolonoscopy Surveillance Advice

- Will Future Pathology Reports Include Likely Therapeutic Recommendations?

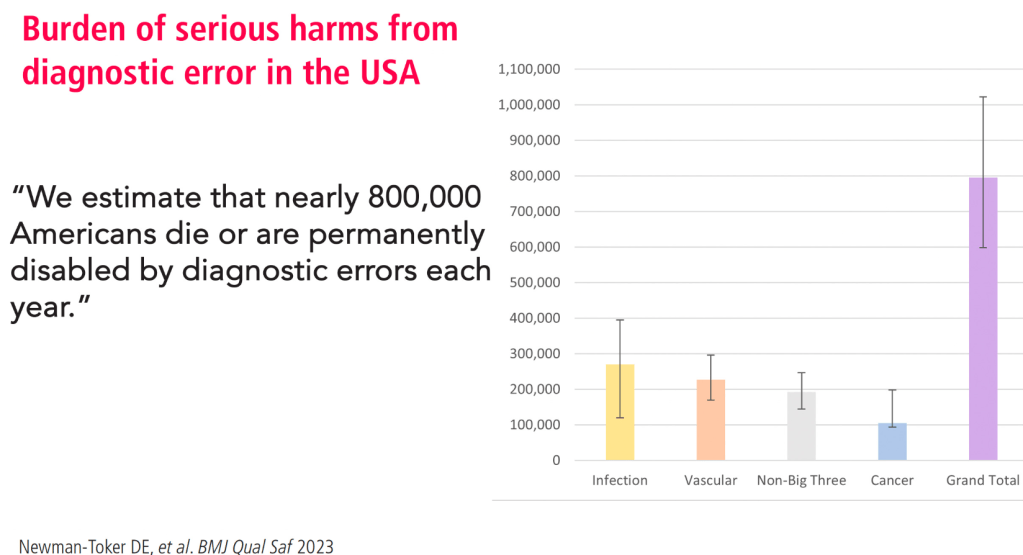

- Medical Diagnostic Errors

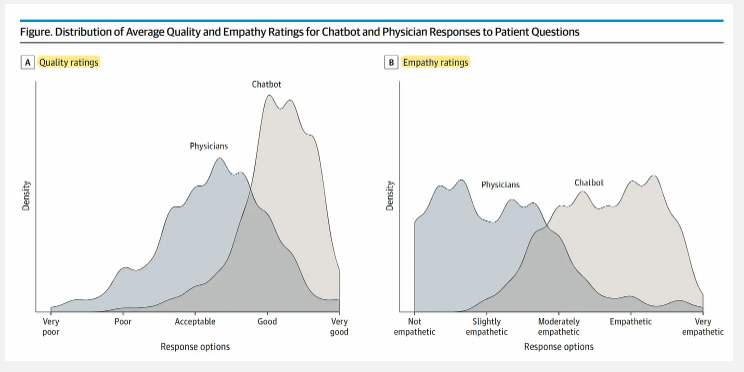

- Answering Patient Questions: AI Does Better Than Doctors

- Dr. Sana Syed: AI Advancements in Pediatric Gastroenterology

Related article: A Soroush et al. Clin Gastroenterol Hepatol 2025; 23: 1472-1476. Impact of Artificial Intelligence on the Gastroenterology Workforce and Practice